No Search Neutrality, Please

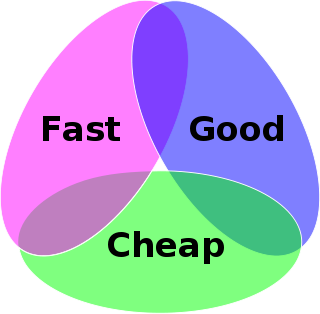

Fairness is always difficult. It is often impossible to achieve in systems where many people make multiple choices. Kenneth Arrow won a Nobel Prize in economics, and has an Impossibility Theorem named after him for his work showing that ranked voting systems cannot be made inherently fair. More precisely, his theorem says that a voting system trying to rank three or more candidates cannot satisfy every reasonable criterion of fairness at once. It’s sort of like the old proverb “fast, cheap, good: pick any two,” because you can’t optimize all three. Mathematically, when three or more candidates are being ranked in a vote, some aspect of fairness (that no single voter dictates the outcome, that a unanimous preference is respected, or that the relative ranking of two candidates does not depend on an irrelevant third) must be infringed.
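To make the flavor of the problem concrete, here is a minimal sketch of the classic voting cycle (the Condorcet paradox). It is not a proof of Arrow’s theorem, only an illustration of the kind of trouble the theorem generalizes. The three ballots and the helper names `majority_prefers` and `pairwise_results` are made up for this example.

```python
from itertools import combinations

# Hypothetical ballots: each tuple ranks candidates from most to least preferred.
ballots = [
    ("A", "B", "C"),
    ("B", "C", "A"),
    ("C", "A", "B"),
]

def majority_prefers(x, y, ballots):
    """Return True if more ballots rank x above y than rank y above x."""
    x_wins = sum(1 for b in ballots if b.index(x) < b.index(y))
    return x_wins > len(ballots) - x_wins

def pairwise_results(ballots):
    """Map each pair of candidates to the majority winner of their head-to-head contest."""
    candidates = sorted(ballots[0])
    results = {}
    for x, y in combinations(candidates, 2):
        if majority_prefers(x, y, ballots):
            results[(x, y)] = x
        elif majority_prefers(y, x, ballots):
            results[(x, y)] = y
        else:
            results[(x, y)] = None  # exact tie
    return results

results = pairwise_results(ballots)
for (x, y), winner in results.items():
    print(f"{x} vs {y}: majority prefers {winner}")
```

With these ballots a majority prefers A to B, B to C, and C to A, so there is no ranking of the three that agrees with every majority. Any rule that nonetheless produces a ranking has to override somebody.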

Every search algorithm, which is another way of ranking choices, is likewise inherently unfair to some of the sources it is ranking. It does not matter whether the search engine is Bing, Ask, Google, or one not yet invented; a ranking of page results cannot be fair in every dimension. It will be biased in some way, just as every voting system is biased in certain directions.
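A toy sketch of the same point for search: the pages, their scores, and the two weightings below are entirely invented, and no real engine is claimed to work this way. The only thing it shows is that the moment you choose what to weight, you have chosen whom to favor.

```python
# Hypothetical pages with made-up scores in [0, 1]; none of this is real data.
pages = {
    "encyclopedia": {"authority": 0.9, "freshness": 0.2},
    "news_site": {"authority": 0.6, "freshness": 0.9},
    "new_blog": {"authority": 0.3, "freshness": 1.0},
}

def rank(pages, weights):
    """Order page names by a weighted sum of their scores, highest first."""
    def score(name):
        return sum(weights[k] * pages[name][k] for k in weights)
    return sorted(pages, key=score, reverse=True)

# Two equally defensible weightings of the same signals.
favor_authority = rank(pages, {"authority": 0.8, "freshness": 0.2})
favor_freshness = rank(pages, {"authority": 0.2, "freshness": 0.8})

print(favor_authority)
print(favor_freshness)
```

The first weighting puts the encyclopedia on top and the new blog last; the second reverses the order. Neither is the neutral one.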

Nonetheless, we want fairness. Neutrality. Search is both an essential service in our lives today, and also a very big business. News, commerce, identity, and indirectly the principles of freedom flow through the tools of search engines. Their power can be misused. We’ve seen that in China, and we can imagine it in theory. If a search algorithm is inherently biased, is there a way to establish or mold it to be more neutral?

There are many, many people upset with the dominant search engine, Google. They see the biased nature of search. Some are business people whose livelihood was affected by Google’s algorithms; others are activists, pundits, or academics concerned with Google’s dominance. James Grimmelmann has rounded up the best arguments for establishing “neutrality” in search in a very long, very clear (and very skeptical) technical paper. He distills the arguments into eight proposed search-neutrality principles:

1. Equality: Search engines shouldn’t differentiate at all among websites.

2. Objectivity: There are correct search results and incorrect ones, so search engines should return only the correct ones.

3. Bias: Search engines should not distort the information landscape.

4. Traffic: Websites that depend on a flow of visitors shouldn’t be cut off by search engines.

5. Relevance: Search engines should maximize users’ satisfaction with search results.

6. Self-interest: Search engines shouldn’t trade on their own account.

7. Transparency: Search engines should disclose the algorithms they use to rank web pages.

8. Manipulation: Search engines should rank sites only according to general rules, rather than promoting and demoting sites on an individual basis.

These seem very reasonable expectations to me. Then Grimmelmann throws his grenade:

As we shall see, all eight of these principles are unusable as bases for sound search regulation.

He tries to clarify again:

Just because search neutrality is incoherent, it doesn’t follow that search engines deserve a free pass under antitrust, intellectual property, privacy, or other well-established bodies of law. Search engines are capable of doing dastardly things: According to BusinessWeek, the Chinese search engine Baidu explicitly shakes down websites, demoting them in its rankings unless they buy ads. It’s easy to tell horror stories about what search engines might do that are just plausible enough to be genuinely scary. My argument is just that search neutrality, as currently proposed, is unlikely to be workable and quite likely to make things worse. It fails at its own goals, on its own definition of the problem.

He then refutes each point, which I summarize here:

1. Equality: “Of course Google differentiates among sites – that’s why we use it. Systematically favoring certain types of content over others isn’t a defect for a search engine – it’s the point.”

2. Objectivity: There is none. “Search users are profoundly diverse. They have highly personal, highly contextual goals. One size cannot fit all…. Who is to say that Yahoo! was right and Google was wrong? One could equally well argue that Google’s low ranking was correct and Yahoo!’s high ranking was the mistake.”

3. Bias: The entire web is biased. “Search SEO, link farms, spam blog comments, hacked websites – you name it, and they’ll try it, all in the name of improving their search rankings. A fully invisible search engine, one that introduced no new values or biases of its own, would merely replicate the underlying biases of the web itself: heavily commercial, and subject to a truly mindboggling quantity of spam.”

4. Traffic: There should be no right to traffic or placement for an incumbent site. If the engine judges that the site is not desirable to its users, it can change its ranking. “Just as the subjectivity of search means that search engines will frequently disagree with each other, it also means that a search engine will disagree with itself over time.”

5. Relevance: Search engines increase relevance to users by making subjective choices, downgrading or upgrading sites based on what they think their users want, not what the sites want. “Search engines compete to give users relevant results; they exist at all only because they do.” Apart from the users’ own judgment, relevance is hard to define, harder to prove, and not useful as a standard.

6. Self-interest: Very possible; sometimes happens. Monopolistic self-dealing behavior is also very plausible. “Search-engine critics argue that search engines should disclose commercial relationships that bear on their ranking decisions. This is a standard, sensible policy response.” But, says Grimmelmann, “This isn’t a neutrality principle, or even unique to search; it’s just a natural application of a well-established legal norm.”

7. Transparency: The sentiment is good, but execution is problematic. If the algorithm is wholly transparent, it will be gamed by spammers or worse and rendered useless. If it is transparent only to regulators, they are unlikely to adapt fast enough to bless innovations. “Everything will hinge on their own ability to evaluate the implications of small details in search algorithms. The track record of agencies and courts in dealing with other digital technologies does not provide grounds for optimism on this score.”

8. Manipulation: Seems bad, but “all search results are manipulated and the more skillfully the better.” What everyone is rightly concerned about is “hand manipulation,” meaning manual intervention or special treatment. “Google itself is remarkably coy about whether and when it changes rankings on an individual basis.” Should spammers get “special treatment”? “Prohibiting local manipulation altogether would keep the search engine from closing loopholes quickly and punishing the loopholers.”

In conclusion, Grimmelmann in effect declares an impossibility theorem for search neutrality. Search engines favor users over websites, and to some (the websites) that may seem unfair.

Search neutrality gets one thing very right: Search is about user autonomy. A good search engine is more exquisitely sensitive to a user’s interests than any other communications technology…. Having asked the right question – are structural forces thwarting search’s ability to promote user autonomy? – search neutrality advocates give answers concerned with protecting websites rather than users. With disturbing frequency, though, websites are not users’ friends. Sometimes they are, but often, the websites want visitors, and will be willing to do what it takes to grab them.

Google is not neutral. It can never be, and neither can Bing or any other search engine. As long as Google favors the user over the commercial interests of websites, and as long as it is reasonable in bridling its own commercial self-interest in favor of the user, its non-neutrality will keep it going, and keep us happy.