Speculations on the Change of Change

The invention of the scientific method, like language and writing, is a major transition in the evolution of technology. But unlike any of the other transitions of the past, the scientific method continues to evolve very rapidly itself. While language is the foundation of all later change (there is no science without language), the basic structure of language has stopped evolving. Science has not. In the long term, the transitions that sprout from the evolution of science will rival the influence of language, because science changes the way change happens.

In fact change in the scientific process is the fastest moving edge of change in the world. It zooms ahead of the speed-of-light shifts we associate with electronic lifestyles, fads and fashion, and the next round of gadgets. We have missed the deep-rooted supernova of change-in-knowing because it has been obscured; we tend to associate the scientific method with academic journals and laboratories, which are mired in the past. But when we consider the scientific method as the generalized way in which we acquire information and structure knowledge, then we can see that it is vast and rapid, outpacing and underlying all other change.

For instance, we are in the process of scanning all the 32 million books published by humans since the time of Sumerian clay tablets till now. Their true value will be unleashed as we hyperlink and cross-reference each idea in their pages – a technique long honored in research but never before practical on the scale of all-books. We have already digitized and linked all law in English, and half of the scholarly journals released in the last 25 years. This digitization enables machine translation to move knowledge from obscure languages to common ones. It enables text mining to discover patterns found in the library of libraries that cannot be seen book by book. These are but two small points in the transformation of information technology. We see daily accelerations in bandwidth, storage and search – each step hyped by glossy magazines and web blogs that mark them as amusements and diversions, which they are not. The entire frontier of computers, hyperlinks, wikis, search indexes, RFID tags, wi-fi, simulations, and the rest of the techno goodie bag is in fact reshaping the nature of science. They are tools of knowledge. First these innovations change what we know, and then they change how we know. Then they change how we change.

Not only will science continue to surprise us with what it discovers and creates, it will continue to modify itself so that it surprises us with new methods. At the core of science’s self-modification is technology. New tools enable new structures of knowledge and new ways of discovery. The scientific method 400 years from now will differ from today’s understanding of science more than today’s scientific method differs from the proto-science used 400 years ago. As in biological evolution, new organizations are layered upon the old without displacement. The present scientific methods are not jettisoned; they are subsumed by new levels of order.

A sensible forecast of technological innovations in the next 400 years is beyond our imaginations (or at least mine), but we can productively envision technological changes that might occur in the next 50 years. Some of these technologies will be self-enabling technologies that will alter the scientific method – that is, new technologies engineered to find and develop other new technologies; new knowledge assembled to find and develop other new knowledge in new ways.

Based on the suggestions of the observers above, and my own active imagination, I offer the following as possible near-term advances in the evolution of the scientific method.

****

Compiled Negative Results – Negative results are saved, shared, compiled and analyzed, instead of being dumped. Positive results may increase their credibility when linked to negative results. We already have hints of this in the recent decision of biochemical journals to require investigators to register early phase 1 clinical trials. Usually phase 1 trials of a drug end in failure and their negative results are not reported. As a public health measure, these negative results should be shared, so journals have pledged not to publish the findings of phase 3 trials if their phase 1 results had not been reported, whether negative or not.

Triple Blind Experiments – All participants are blind to the fact of the experiment during measurement. While ordinary life continues, massive amounts of data are drawn and archived. From this multitude of measurements, controls and variables are identified and “isolated” afterwards. For instance, the vital signs and lifestyle metrics of a hundred thousand people might be recorded non-invasively for 20 years, and then later analysis could find certain variables (smoking habits, heart conditions) that would permit the entire 20 years to be viewed as an experiment – one that no one knew was even going on.

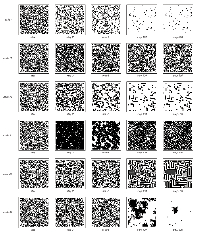

Combinatorial Sweep Exploration – Much of the unknown can be explored by systematically creating random varieties of it at a large scale. You can explore a certain type of ceramic by creating all possible types of ceramic, and then testing them. You can explore certain realms of proteins by generating all possible variations of that type of protein and then seeing if they bind. You can discover new algorithms by automatically programming all possible programs and then running them. Indeed all possible Xs of almost any sort can be summoned and examined as a way to study X. The parameters of this “library” of possibilities become the experiment. With sufficient computational power, together with a pool of proper primitive parts, vast territories unknown to science can be probed.
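
As a toy illustration of sweeping "all possible programs," here is a minimal Python sketch. The pool of primitives, the target behaviour, and the names `PRIMITIVES`, `run` and `sweep` are all my own assumptions for the example, not an established method; a real sweep would need a far richer set of parts and far more computing power.

```python
from itertools import product

# Hypothetical pool of primitive parts: tiny one-argument operations.
PRIMITIVES = {
    "inc": lambda x: x + 1,
    "dec": lambda x: x - 1,
    "double": lambda x: x * 2,
    "square": lambda x: x * x,
}

def run(program, x):
    """Apply each named primitive, in order, to x."""
    for name in program:
        x = PRIMITIVES[name](x)
    return x

def sweep(examples, max_length):
    """Enumerate every program up to max_length; keep those that fit all examples."""
    hits = []
    for length in range(1, max_length + 1):
        for program in product(PRIMITIVES, repeat=length):
            if all(run(program, x) == y for x, y in examples):
                hits.append(program)
    return hits

# Target behaviour (assumed for illustration): f(x) = (x + 1) ** 2
examples = [(0, 1), (1, 4), (2, 9), (3, 16)]
matches = sweep(examples, max_length=2)
print(matches)  # [('inc', 'square')]
```

The library here holds only 4 + 16 candidate programs, but it grows exponentially with program length, which is why the parameters of the library matter as much as the test.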

Evolutionary Search – A combinatorial exploration can be taken even further. If new libraries of variations can be derived from the best of a previous generation of good results, it is possible to evolve solutions. The best results are mutated and bred toward better results. The best testing protein is mutated randomly in thousands of ways, and the best of that bunch kept and mutated further, until a lineage of proteins, each one more suited to the task than its ancestors, finally leads to one that works perfectly. This method can be applied to computer programs and even hypotheses.
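
The mutate-and-keep-the-best loop can be sketched in a few lines of Python. Everything specific here is an assumption made for illustration: the "protein" is just a short string of amino-acid letters, and the fitness score (how many positions match a known target) stands in for a real binding assay, where the answer would not be known in advance.

```python
import random

def fitness(candidate, target):
    """Stand-in assay: count positions where candidate matches the target."""
    return sum(c == t for c, t in zip(candidate, target))

def mutate(candidate, rng, alphabet):
    """Return a copy of candidate with one randomly chosen position replaced."""
    i = rng.randrange(len(candidate))
    return candidate[:i] + rng.choice(alphabet) + candidate[i + 1:]

def evolve(target, alphabet, offspring=200, generations=500, seed=0):
    rng = random.Random(seed)  # fixed seed so the run is repeatable
    best = "".join(rng.choice(alphabet) for _ in target)
    history = [fitness(best, target)]
    for _ in range(generations):
        if history[-1] == len(target):
            break  # nothing left to improve
        brood = [mutate(best, rng, alphabet) for _ in range(offspring)]
        # Keep the parent in the pool so fitness never goes backwards.
        best = max(brood + [best], key=lambda c: fitness(c, target))
        history.append(fitness(best, target))
    return best, history

# Hypothetical 10-residue target and the 20 standard amino-acid letters.
best, history = evolve("MKTAYIAKQR", "ACDEFGHIKLMNPQRSTVWY")
print(best, history)
```

Because the parent always competes with its offspring, the recorded fitness can only hold steady or rise from one generation to the next; in a toy setting this small, the lineage would normally reach the target well before the generation limit.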

Multiple Hypothesis Matrix – Instead of proposing a single hypothesis, a matrix of hypothesis scenarios is proposed and managed. Many of these hypotheses may be algorithmically generated. But they are entertained simultaneously. An experiment travels through the matrix of multiple hypotheses, and more than one thesis is permitted to stand with the results. The multiple theses are passed on to the next experiment.
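
One way to picture this, as a rough Python sketch: hold several candidate hypotheses at once, run each experiment's observations past all of them, and hand every survivor to the next experiment. The four hypotheses, the measurements, and the tolerance below are invented for the example.

```python
# Hypothetical candidate hypotheses about how y depends on x.
HYPOTHESES = {
    "linear: y = 2x": lambda x: 2 * x,
    "quadratic: y = x^2": lambda x: x * x,
    "exponential: y = 2^x": lambda x: 2 ** x,
    "constant: y = 4": lambda x: 4,
}

def surviving(hypotheses, observations, tolerance=0.5):
    """Return the hypotheses consistent with every observation within tolerance."""
    return {
        name: predict
        for name, predict in hypotheses.items()
        if all(abs(predict(x) - y) <= tolerance for x, y in observations)
    }

# Made-up measurements from two successive experiments.
experiment_1 = [(2, 4.1)]
experiment_2 = [(3, 8.5)]

after_first = surviving(HYPOTHESES, experiment_1)
after_second = surviving(after_first, experiment_2)
print(sorted(after_second))
# ['exponential: y = 2^x', 'quadratic: y = x^2']
```

The first experiment cannot tell any of the four apart; the second eliminates two and leaves two standing, and both would be carried into a third experiment rather than forcing an early choice.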

Pattern Augmentation – Software to aid in detecting patterns of results. Curve-fitting software which seeks out a pattern in statistical information is a precursor. But in large bodies of information with many variables, algorithmic discovery of patterns will become necessary and common. These exist in specialized niches of knowledge (such as particle smashing) but more general rules and engines will enable pattern seeking tools to become part of all data treatment.
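
A very modest precursor of such a tool, sketched in Python under my own assumptions: scan every pair of variables in a table and flag the strongly correlated ones. The table is made up, the 0.9 threshold is arbitrary, and plain Pearson correlation is only one of many possible pattern detectors.

```python
from itertools import combinations
from math import sqrt

def pearson(xs, ys):
    """Pearson correlation coefficient (assumes neither column is constant)."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sx = sqrt(sum((x - mx) ** 2 for x in xs))
    sy = sqrt(sum((y - my) ** 2 for y in ys))
    return cov / (sx * sy)

def strongest_pairs(table, threshold=0.9):
    """Scan every pair of columns; report those with |r| >= threshold."""
    found = []
    for a, b in combinations(table, 2):
        r = pearson(table[a], table[b])
        if abs(r) >= threshold:
            found.append((a, b, round(r, 3)))
    return sorted(found, key=lambda item: -abs(item[2]))

# Made-up measurements: four variables, six samples each.
table = {
    "dose":     [1, 2, 3, 4, 5, 6],
    "response": [2.1, 3.9, 6.2, 8.1, 9.8, 12.2],
    "noise":    [5, 1, 4, 2, 6, 3],
    "decay":    [12, 10, 8, 6, 4, 2],
}
pairs = strongest_pairs(table)
for a, b, r in pairs:
    print(a, b, r)
```

With four variables there are only six pairs to check; with thousands of variables the same sweep produces millions of candidate patterns, which is where more general engines, and care about chance correlations, would be needed.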

Adaptive Real Time Experiments – Results evaluated, and large-scale experiments modified in real time. What we have now is primarily batch-mode science. Traditionally, the experiment starts, the results are collected, and conclusions are reached. Then the next experiment is designed in response, and launched. In adaptive experiments, the analysis happens in parallel with collection, and the intent and design of the test is shifted on the fly. Some medical tests are already stopped or re-evaluated on the basis of early findings; this approach would extend that practice to other realms. Proper methods would be needed to keep the adaptive experiment objective.
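
The simplest form of adaptation, stopping early on interim findings, might look like the following Python sketch. The two arms, the data stream, and the stopping margin are assumptions made up for the example, and the stopping rule is deliberately naive: it is exactly the sort of rule that would need a proper statistical method behind it to keep the experiment objective.

```python
def run_adaptive(stream, check_every=10, stop_margin=0.3, min_per_arm=10):
    """Consume (arm, outcome) pairs, analysing while the data arrives.

    Stops early once one arm's mean outcome leads the other by stop_margin.
    This is a naive placeholder rule, not a valid sequential test.
    """
    totals = {"A": 0.0, "B": 0.0}
    counts = {"A": 0, "B": 0}
    seen = 0
    for seen, (arm, outcome) in enumerate(stream, start=1):
        totals[arm] += outcome
        counts[arm] += 1
        ready = min(counts.values()) >= min_per_arm
        if ready and seen % check_every == 0:  # interim look at the data
            mean_a = totals["A"] / counts["A"]
            mean_b = totals["B"] / counts["B"]
            if abs(mean_a - mean_b) >= stop_margin:
                leader = "A" if mean_a > mean_b else "B"
                return {"stopped_early": True, "leader": leader, "observations": seen}
    return {"stopped_early": False, "leader": None, "observations": seen}

# Made-up outcomes: arm B succeeds (1) more often than arm A.
stream = [("A", 0), ("B", 1), ("A", 1), ("B", 1)] * 50
result = run_adaptive(stream)
print(result)
# {'stopped_early': True, 'leader': 'B', 'observations': 20}
```

Here the analysis runs alongside collection, and the experiment ends after 20 of a possible 200 observations. A fuller adaptive design would also shift how new subjects are assigned, not just when to stop.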

AI Proofs – AI to check the logic of an experiment. As science experiments become ever more sophisticated and complicated, they become ever more difficult to judge. Artificial expert systems will at first evaluate the scientific logic of a paper to ensure the architecture of the argument is valid, and that it publishes the required types of data. This will augment the opinions of editors and peer-reviewers, but over time, as the protocols for an AI check become standard, AI can score many papers for certain consistencies.

Wiki-Science – Experiments involving thousands of investigators collaborating on a “paper.” The paper is ongoing, and never finished. It is really a trail of edits and experiments posted in real time to an evolving “document.” Contributions are not assigned. The average number of authors per paper continues to rise. With massive collaborations, the numbers will boom. Tools for tracking credit and contributions will be vital.

Defined Benefit Funding – The use of prize money for particular scientific achievements. A goal is defined, funding secured for the first to reach it, and the contest opened to all. This method can also be combined with prediction markets, which wager on possible winners, and can liberate further funds for development.

Zillionics – Ubiquitous 24/7 sensors in bodies and environment will transform medical and environmental sciences. Unrelenting rivers of sensory data will flow day and night from zillions of sources. The exploding number of new, cheap, wireless, and novel sensing tools will require new types of programs to distill, index and archive this ocean of data, as well as to find meaningful signals in it. The field of “zillionics” — dealing with zillions of data flows — will be essential in health, natural sciences, and astronomy.

Deep Simulations – As our knowledge of complex systems advances, we can construct more complex simulations of them. Both the successes and failures of these simulations will help us to acquire more knowledge of the systems. Developing a robust simulation will become a fundamental part of science in every field. Indeed the science of making viable simulations will become its own specialty, with a set of best practices, and an emerging theory of simulations. And just as we now expect a hypothesis to be subjected to the discipline of being stated in mathematical equations, in the future we will expect all hypotheses to be exercised in a simulation.

Hyper-analysis Mapping – Just as meta-analysis gathered diverse experiments on one subject and integrated their (sometimes contradictory) results into a large meta-view, hyper-analysis creates an extremely large-scale view by pulling together meta-analyses. The cross-links of references, assumptions, evidence and results are unraveled by computation, and then reviewed at a larger scale. Hyper-mapping tallies not only what is known in a particular wide field, but also emphasizes unknowns and contradictions. It is used to spotlight “white spaces” where additional research would be most productive.

Return of the Subjective – Science came into its own when it managed to refuse the subjective and embrace the objective. The repeatability of an experiment by another, perhaps less enthusiastic, observer was instrumental in keeping science rational. But as science plunges into the outer limits of scale – at the largest and smallest ends – and confronts the weirdness of the fundamental principles of matter/energy/information, it may not be able to ignore the role of the observer. Existence seems to be a paradox of self-causality, and any science exploring the origins of existence will eventually have to embrace the subjective, without becoming irrational. The tools for managing paradox are still undeveloped.