The Arc of Complexity

“Everyone knows” that evolution has become more complex over the past 3.8 billion years. One species has spawned 30-100 million species. Organelles, multicellularity, tissues, and social systems have all appeared in life forms over this span. And everyone knows that technology has increased that complexity further. It is obvious that the mechanical complexity of the technium has increased in our lifetimes, if not in the last hour. The only problem with this conventional wisdom: we have no idea what complexity is, or how to define it.

What’s more complex, a cucumber or a Boeing 747? The answer is unknown. We have no way to measure the difference in order and organization between the two, and we don’t have a good working definition of complexity to even frame the question. Seth Lloyd, a quantum physicist at MIT, has counted 42 different mathematical definitions of complexity. Most of them are not universal (they only work in small domains, not across broad fields like life and technology), and most are theoretical (you can’t actually use them to measure anything in real life). They are more like thought experiments.

Yet we have an intuitive sense that “complexity” exists, and is increasing. The organization of a dog is much more complicated than that of a bacterium, but is it ten times more complicated, or a million times? Most attempts to distinguish complexity aim at the degree of order in the system. A crystal is highly ordered, to the point that a large hunk of diamond could be described mathematically with a small set of data points: you take carbon and repeat it X times in configuration Y over three dimensions. A bigger piece of diamond (or any crystal) has the same repeating “order” just extended further in space. Its structure is very predictable, and therefore simple, or low in complexity. What could be simpler than a homogeneous bit of stuff?

On the other hand, a heterogeneous, mixed-up piece of granite, or a piece of plant tissue, would offer less predictable order (you could not determine what element the adjacent atom would be), and therefore more complexity. The least ordered, least predictable things we know of are random numbers. They contain no expected pattern, and therefore by this logic (less order = more complexity) they would be the most complex things we know of. In other words, a messy, randomly ordered, chaotic house would be more complex than a tidy, well-ordered house. For that matter, a chopped up piece of hamburger would be more “complex” than the same hunk of meat in the intact living cow. This does not ring true for our intuitive sense of complexity. Surely complexity must reflect a certain type, a special kind of order/disorder?

Crystals just repeat the same pattern over and over again. So an extremely highly ordered sequence, like the repeating pattern in a crystal, can be reduced to a small description. A trillion digits of this sequence, 0101010101010…, can be perfectly compressed, without any loss of information, into one short sentence with three commands: print zero; then one; repeat half a trillion times. On the other hand, a highly disordered sequence like a random number cannot be reduced. The smallest description of a random number is the random number itself; there is no compression without loss, no way to unpack a particular randomness from a smaller package than itself.
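To make the contrast concrete, here is a minimal sketch in Python. It uses the standard library's zlib compressor as a stand-in for the “smallest description,” which is an assumption on my part: a general-purpose compressor only gives an upper bound on how short a description can be, not the true minimum. The helper name `compressed_size` is purely illustrative.

```python
import os
import zlib


def compressed_size(data: bytes) -> int:
    """Length in bytes after lossless zlib compression.

    This is only an upper bound on the shortest possible description,
    not the true minimum.
    """
    return len(zlib.compress(data, 9))


n = 100_000
ordered = b"01" * (n // 2)   # the 0101... pattern, n characters long
disordered = os.urandom(n)   # n bytes of operating-system randomness

print("ordered:   ", n, "->", compressed_size(ordered))
print("disordered:", n, "->", compressed_size(disordered))
```

The repeating string shrinks to a tiny fraction of its length, while the random bytes do not shrink at all; the compressed output is typically a few bytes larger than the input.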

But the problem with defining randomness as the peak of complexity is that randomness doesn’t take you anywhere. The “pattern” has no depth. It takes no time to “run” it because nothing happens while it runs. A highly ordered sequence, such as 0101010101010 doesn’t go far either, but it goes further than randomness. You at least get a regular beat. A meaningful measure of complexity then would reckon the depth of pattern in the system. Not just its order, but its order in time. You could measure not only how small the system could be compressed (more compression = less complexity), but how long the compression would take to unpack (longer = more complexity). So while all the complicated variations, and unpredictable arrangements of atoms that make up a blue whale can be compressed into a very tiny sliver of DNA code (high compression = low complexity), it takes a lot of time and effort to “run” out this code (high complexity). A whale therefore is said to have great “logical depth.” The higher complexity ranking of a random number is shallow compared to the deeper logical complexity of a complicated structure in between crystalline order and messy chaos.
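One crude way to see the difference between a short description that unpacks quickly and one that takes work to unpack is to count steps. The sketch below is a toy of my own, not the formal definition: logical depth is defined in terms of the run time of the shortest programs, which cannot be computed in general. Here two short generators are compared, the alternating 0101 pattern and the center column of the Rule 30 cellular automaton (an arbitrary choice for illustration), and each counts its own elementary operations as a stand-in for run time.

```python
def repeat_01(n_bits):
    """Short description, shallow: each output bit costs one step."""
    out, steps = [], 0
    for i in range(n_bits):
        out.append(i % 2)
        steps += 1
    return out, steps


def rule30_center(n_bits):
    """Short description, deeper: each output bit requires updating
    every cell of the automaton's row."""
    width = 2 * n_bits + 1          # wide enough that the edges are never reached
    center = n_bits
    cells = [0] * width
    cells[center] = 1               # start from a single live cell
    out, steps = [], 0
    for _ in range(n_bits):
        out.append(cells[center])
        new = [0] * width
        for i in range(width):
            left = cells[i - 1] if i > 0 else 0
            right = cells[i + 1] if i < width - 1 else 0
            new[i] = left ^ (cells[i] | right)   # the Rule 30 update
            steps += 1
        cells = new
    return out, steps


for n in (10, 100, 1000):
    _, shallow = repeat_01(n)
    _, deep = rule30_center(n)
    print(n, "bits:", shallow, "steps vs", deep, "steps")
```

Both generators are only a few lines long, yet the work needed to run the second grows roughly with the square of the output length, while the first grows linearly. That gap, not the size of the description, is what the idea of depth is pointing at.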

“Logical depth” is a good measure for strings of code, but most structures we care about, such as living organisms or technological systems, are embodied in materials. For instance, both an acorn and an immense 100-year-old oak tree contain the same DNA. The code held by the tree and its seed can both be compressed to the same minimal string of symbols (since they have exactly the same DNA), therefore both structures have the same logical depth of complexity. But we sense the tree, with all those unique crenulated leaves and crooked branches, to be more complex than the acorn. So researchers added the concept of “thermodynamic depth” in quantifying complexity. This metric considers the number of quantum bits that are flipped, or used, in constructing the fully embedded string of code. It measures the total quantum energy and entropy spent in making the code physical, either in a small acorn or, with more energy and entropy, in a majestic spreading tree. So a tree with more thermodynamic depth has more complexity than its acorn.

But when it comes to quantifying the complexity of a cucumber versus a jet these theoretical measures don’t help a lot. Most artifacts and organisms carry large hunks of useless, insignificant, random-like parts that raise the formal complexity quotient, but don’t really add complexity in the way we intuitively sense it. The DNA string of the cucumber (and all organisms) appears to be overrun with non-coding “junk DNA” while most of the atoms of a 747 – in its aluminum – are arranged purely at random, or at best in mini-crystals. Real objects are a grand mixture of chaos and order, and tend to hover in a sweet spot between the two.

It is precisely that Goldilocks state between predictable repeating crystalline order and messy chaotic randomness that we feel captures real complexity. But this “neither-order-nor-non-order” state is so elusive to measurement that Seth Lloyd once quipped that “things are complex exactly when they defy quantification.” However, Lloyd, together with Murray Gell-Mann, another quantum physicist, devised the 42nd definition of complexity in the latest attempt to quantify what we sense. Since randomness is so distracting, producing “shallow” complexity, they decided to simply ignore it. Their measurement, called effective complexity, formally separates the random component of a structure’s minimal code and then measures the regularities that remain. In effect it measures the logical depth after randomness is subtracted from the whole. This metric is able to identify those rare systems (out of all possible systems) that cannot be compressed, yet are not random. An example in real life might be a meadow. Nothing much smaller than a meadow itself could contain all the information, subtle order and complexity of myriad interacting organisms making up a meadow. Because it is incompressible, a meadow shares the high complexity quotient of shallow randomness; but because its irreducibility is not due to randomness, it owns a deep complexity that we appreciate. That difference is captured by the metric of effective complexity.

We might think of effective complexity as a mathematical way to quantify non-predictable regularities. DNA itself is an example of non-predictable regularity. It is often described as a non-periodic crystal. In its stacking and packing abilities it shares many predictable regularities of a crystal, but rare among crystals, it is non-repeating (non-periodic) because each strand can vary. Therefore it has a high effective complexity.

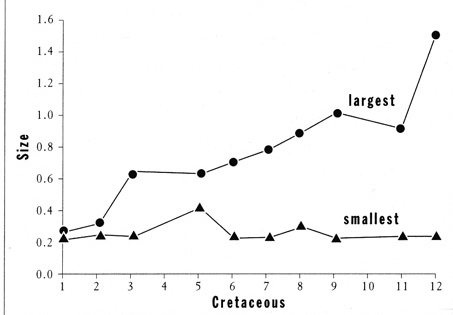

But the increase in effective complexity in life’s history is not ubiquitous, and not typical. While a rise in complexity can be seen retrospectively in the broad lineage of life, it usually can’t be seen up close in a typical taxonomic family. There’s no factor associated with complexity that will consistently gain across all branches of life all the time. Scientists intuitively expected larger and later organisms to acquire more genes. In broad strokes that can be true, but on the other hand a lily plant and an ancient lungfish each have about 40 times as many DNA base pairs as humans. Many “laws of acceleration” have been proposed for evolution and all of them disappear when inspected quantitatively in a specific range. One of the earliest laws proposed, Cope’s Rule, posited in the 1880s, says that over time life evolved larger bodies. While a trend toward large body size is true within some lines (notably dinosaurs and horses), it is not true of life as a whole (we are much smaller than Tyrannosaurus rex), and it is not true for all dinosaur lineages, or all branches of ancestral horses. More importantly, in most orders of life, the largest organisms may tend to grow larger, but the mean size of the organisms remains constant. The smallest stay small. Stephen Gould interprets this as “random evolution away from small size, not directed evolution toward large size.”
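Gould’s “random evolution away from small size” can be sketched as a toy simulation. Everything in it is my own assumption for illustration, not a model taken from Gould: each lineage starts at a minimum viable size (the `floor`), and in every generation its size goes up, down, or stays put with equal probability, except that it can never drop below the floor. There is no built-in push toward large size.

```python
import random
from collections import Counter


def simulate_sizes(lineages=2000, generations=300, floor=1, seed=0):
    """Unbiased random walk in body size with a hard lower limit."""
    rng = random.Random(seed)
    sizes = [floor] * lineages
    for _ in range(generations):
        for i in range(lineages):
            step = rng.choice((-1, 0, 1))            # no preferred direction
            sizes[i] = max(floor, sizes[i] + step)   # cannot shrink below the floor
    return sizes


sizes = simulate_sizes()
most_common_size = Counter(sizes).most_common(1)[0][0]
print("largest size:    ", max(sizes))
print("most common size:", most_common_size)
```

Even with no directional bias, the largest lineage ends up far from where it started, simply because the only open direction is up. The most common size stays close to the floor. In this toy the average does creep upward somewhat as the tail spreads, so it illustrates the shape of the argument rather than the exact statistics reported for real lineages.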

A second proposed universal law says that over evolutionary time the lifespan of species increases: species become more stable. And in fact at certain epochs in the past, the longest-lived species did live longer. But the mean longevity did not increase. This pattern is repeated throughout the tree of life. Maximum diversity increases, but the mean diversity does not. Maximum brain size increases in many animals over time, but the mean brain size does not. When we apply the feeble measurements we have for complexity, we find that maximum complexity increases over evolutionary time, but mean complexity does not.

Typical pattern of increasing size among foraminifera (similar to plankton)

Nowhere is this more evident than in the makeup of the bulk of primeval life on earth. Life began 3.8 billion years ago, and for the first half of that time, its first two billion years, life was bacterial. This first half of life’s show was the Age of Bacteria, because bacteria were the only life.

Today, in an expanded biosphere crammed with 30-100 million species, bacteria still make up the bulk of life on earth. Maverick scientist Thomas Gold estimates that there is more bacterial mass living in the crevices of solid rock in the earth’s crust than there is in all the fauna and flora on earth’s surface. Some estimates put the total bacterial biomass (in terms of sheer weight) within soil, oceans, rocks, and in the guts of animals to be 50% of all life on this planet. Bacteria are also significantly more diverse than visible life. The bacterial world is where gene hunters go to find unusual genes for drugs and other innovations. There is nowhere bacteria have not colonized. Bacteria thrive in more extreme environments – cold, dry, pounding deep pressure, scorching heat, total darkness, toxic elements, intense radiation – than any other kind of life. They produce much of the oxygen for the planet. They underpin most ecosystems. They dwarf the rest of life in genomic variety. The bacteria breeding in the interstices of loamy soil and in the depths of the ocean and in the warm tub of your own intestines are all as highly evolved as you are. Each bacterium is the result of an unbroken succession of 100% successful ancestors, trillions of generations long, and each is the product of constant, hourly, adaptive pressure to maximize its fitness to its environment. Each bacterium is the best that evolution can do after several billion years.

In every biological way we can measure, bacteria are the main event of life on earth. If an exploring probe from another galaxy landed on earth and began a life census, it would quickly and correctly deduce that after 3.8 billion years of evolution this planet was still in the Age of Bacteria.

As far as we can tell most bacteria are the same as they were a billion years ago. That means for the bulk of life on earth, the main event has not been a steady increase in complexity, but a remarkable conservation of simplicity. After billions of years of steady work, evolution produces mostly more of the same.

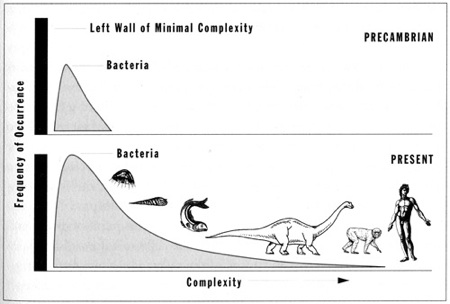

It would be entirely fair, then, to represent the long arc of evolution much as Stephen Gould has in the diagram above. As he renders it, the main event in both epochs, early and late, is the reigning pyramid of simple bacteria. In the middle of the sloping bulge are the mid-size organisms – the grasses, plants, fungi, algae, coral of the world. Not as simple or collectively massive as bacteria, these semi-simple organisms nonetheless constitute the bulk of life that interacts with us directly. They don’t form the infrastructure; this middle life forms the architecture. These simpler organisms introduce the change and variety in our lives. At the bottom edge, extending to the right, is the thinning, minor “long tail” of the more complex organisms. These are the scarce charismatic organisms that star in nature shows. The message of this sketch, Gould concludes, is that “the outstanding feature of life’s history has been the stability of its bacterial mode over billions of years!” Not just bacteria, he reminds us. Horseshoe crabs, crocodiles, and the coelacanth are famously stable in geological time.

But it takes a peculiar kind of blindness to see stability as the chief event in this movie. In scene one there is a nice hill of simple bacteria. In scene two, the hill has enlarged and grown a long tail of weird, complicated, improbable beings. They seem to come out of nowhere, and because of their complexity, must be more surprising and unexpected than the arrival of life itself. Sure, in a quantitative, almost autistic kind of reckoning, nothing much happened because the hill of bacteria is basically the same. This is true! The veracity of this observation is the foundation of the orthodox arguments against a trend for complexity in evolution. The drive in evolution is to keep things simple and thus the same.

Everything is the same, except for, well... the addition of a few little meaningful details. This blindness reminds me of a quip by Mark Twain on the consequential difference “between lightning and a lightning bug.” Apes, proto-humans, and humans are all basically the same. Nothing really happened between scene one, Homo erectus, and scene two, Homo sapiens. The genes between the two are likely to be 99.99% conserved. When the long thin tail of language appeared in one ape, it was a minor alteration compared to the bulk of everything else about apes that remained stable. Yet, how that additional “bug” changes the meaning of everything else! So by the reckoning of the imagination, everything has changed over time.

To be fair to the orthodoxy, I don’t think Gould and others would deny the power of very small changes to have profound effects. The contention is whether these small changes (like occasional lines of increasing complexity) are the main event or simply a side effect of the main event. To rephrase Gould, are we really just witnessing random evolution away from simplicity, rather than directed evolution toward complexity? If this kind of evolutionary complexity is a minority thread, then how can we claim it is being driven, pushed by evolution?

The evidence lies in deep history. The two scenes in the cartoon diagram above are not beginning and end, but the middle. Their action takes place in Act Two of a long movie, the great story of the cosmos. The first Act begins long before this sequence appears, and the third Act follows it. The long arc of complexity begins before evolution, then flows through the 3.8 billion years of life, and then continues into the technium.

Seth Lloyd, among others, suggests that effective complexity did not begin with biology, but began at the big bang. (I argue the same in different language in The Cosmic Origins of Extropy and The Cosmic Genesis of Technology.) In Lloyd’s informational perspective, fluctuations of quantum energy (or gravity) within the first femtoseconds of the cosmos caused matter and energy to clump. Amplified over time, with gravity, these clumps are responsible for the large-scale structure of galaxies – which in their organization display effective complexity. Recently three researchers (Ay, Müller, and Szkoła) determined that effective complexity is primed to generate phase changes. A phase change is the weird transformation, or restructuring, that the molecules of a substance like water undergo as it assumes three very different forms – solid ice, liquid, or steam. Systems (like a galaxy) can also exhibit phase changes, producing new informational organization with the same components.

In Lloyd’s scheme, “gravitational clumping supplies the raw material necessary for generating complexity,” which in turn generates new levels of effective complexity in the form of self-regulating atmospheric planets, life, mind and technology. “In terms of complexity, each successive revolution inherits virtually all the logical and thermodynamic depth of the previous revolution.” This ratcheting process keeps upping the effective complexity over deep time.

This slow ratchet of complexity preceded life. Effective complexity was imported from antecedent structures, such as galaxies and stars, that teetered on the edge of persistent disequilibrium. And as in the organizations before them, effective complexity accrues in an irreversible stack. Lloyd observes, “This initial revolution in information processing [in galaxies and clusters of galaxies] was followed by a sequence of further revolutions: life, sexual reproduction, brains, language…and whatever comes next.”

In 1995, two biologists, John Maynard Smith and Eors Szathmary, envisioned the major transitions in organic evolution as a set of ratcheting organizations of information flow. Their series of eight revolutionary steps in evolution began with “self-replicating molecules” transitioning to the more complex self-sustaining structure of “chromosomes.” Then evolution passed through the further complexifying change “from prokaryotes to eukaryotes” cell type and after a few more phase changes, the last transition moved it from language-less societies to those with language.

Each transition shifted the unit that replicated (and upon which natural selection worked). At first, molecules of nucleic acid duplicated themselves, but once they self-organized into a set of linked molecules, they replicated together as a chromosome. Now evolution worked on both nucleic acid and chromosomes. Later, these chromosomes, housed in primitive prokaryote cells like bacteria, joined together to form a larger cell (the component cells became organelles of the new cell), and now their information was structured and replicated via the complex eukaryote host cell (like an amoeba). Evolution began to work on three levels of organization: genes, chromosomes, cells. These first eukaryote cells reproduced by division on their own, but eventually some (like the protozoan Giardia) began to replicate sexually, and so now life required a diverse sexual population of similar cells to evolve. A new level of effective complexity was added: Natural selection began to operate on populations as well. Populations of early single-cell eukaryotes could survive on their own, but many lines self-assembled into multicellular organisms, and so replicated as an organism, like a mushroom, or seaweed. Now natural selection operated on multicelled creatures, in addition to all the lower levels. Some of these multicellular organisms (such as ants, bees, termites) gathered into superorganisms, and could only reproduce within a colony or society, and evolution emerged at the society level as well. Later, language in human societies gathered individual ideas and culture into a global technium, and so humans and their technology could only prosper and replicate together, presenting another level for evolution and effective complexity.

At each escalating step, the logical and thermodynamical depth of the resulting organization increased. It became more difficult to compress the structure, and at the same time, it contained less randomness and less predictable order. Each upcreation was also irreversible. In general, multicellular lineages do not re-evolve into single cell organisms, sexual reproducers rarely evolve into parthenogens, social insects rarely unsocialize, and to the best of our knowledge, no replicator with DNA has ever given up genes. Nature will simplify, but it rarely devolves down a level. However nature is nothing but a collection of exceptions. There is no rule in biology that is not broken or hacked by some creature, somewhere. Yet here the trend is mainstream and representative of the mean. When life does complexify in levels, it does not retract.

Just to clarify: within a level of organization, trends are uneven. A movement toward larger size, or greater longevity, or higher metabolism, or even general complexity may be found only in a minority of species within a family, and the trend may be subject to reversal on average. So when biologists search for a measured increase in some body characteristic over evolutionary time they typically find patchy distribution as soon as their survey widens beyond a narrow taxonomic branch. Consistent directions of evolution are absent across unrelated subjects in similar epochs. The trend toward greater effective complexity is visible only in the accumulation of large-scale organizations over large-scale time. Complexification may not be visible within ferns, say, but it appears between ferns and flowering plants (recombining information via sexual fertilization).

We can take the second panel of Gould’s diagram as a wonderful and ideal illustration of this escalation. The long thin tail of increasing complexity is actually the trace of the major transitions in evolution. The shift to the right is a shift in the number of hierarchical levels that evolutionary information must flow through. Not every evolutionary line will proceed up the escalator (and why should they?), but those that do advance will unintentionally gain new powers of influence that can alter the environment far beyond them. And, as in a ratchet, once a branch of life moves up a level, it does not move back. In this way there is an irreversible drift towards greater effective complexity.

The arc of complexity flows from the dawn of the cosmos and into life. Complexity theorist James Gardner calls this “the cosmological origins of biology.” But the arc continues through biology and now extends itself forward through technology. The very same dynamics that shape complexity in the natural world shape complexity in the technium.

Just as in nature, the number of simple manufactured objects continues to increase. Brick, stone and concrete are some of the earliest and simplest technologies, yet by mass they are the most common technologies on earth. And they compose some of the largest artifacts we make: cities and skyscrapers. Simple technologies fill the technium in the way bacteria fill the biosphere. There are more hammers made today than at any time in the past. Most of the visible technium is, at its core, non-complex technology.

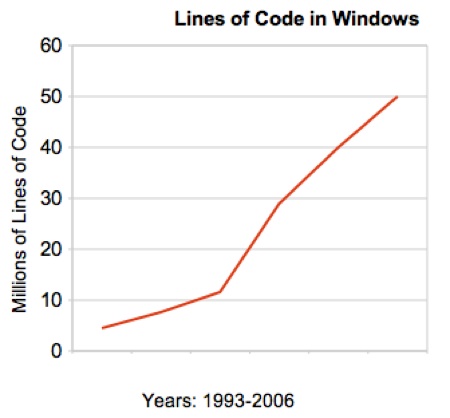

But as in natural evolution, a long tail of ever complexifying arrangements of information and materials fills our attention, even if they are small in mass. Indeed, demassification is one avenue of complexification. Complex inventions stack up information rather than atoms. The most complex technologies we make are also the lightest, least material. For instance, software in principle is weightless and disembodied. It has been complexifying at a rapid rate. The number of lines of code in a basic tool such as Microsoft’s Windows has increased tenfold in thirteen years. In 1993, Windows entailed 4-5 million lines of code. In 2006, Windows Vista contained 50 million lines of code. Each of those lines of code is the equivalent of a gear in a clock. The Windows OS is a machine with 50 million moving pieces.
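The tenfold-in-thirteen-years figure implies a compounding rate that is easy to back out. The snippet below is a small worked calculation, assuming 4.5 million lines as the midpoint of the 1993 range and smooth exponential growth between the two dates. Real code bases grow in jumps, so treat the result as a rough average only; the function name is illustrative.

```python
import math


def annual_growth(start: float, end: float, years: float) -> float:
    """Constant yearly growth rate that turns `start` into `end`."""
    return (end / start) ** (1 / years) - 1


lines_1993 = 4.5e6   # assumed midpoint of the 4-5 million range
lines_2006 = 50e6
rate = annual_growth(lines_1993, lines_2006, 2006 - 1993)
doubling_years = math.log(2) / math.log(1 + rate)

print(f"average growth per year: {rate:.1%}")
print(f"doubling time in years:  {doubling_years:.1f}")
```

Under these assumptions the code base grew by roughly a fifth each year, doubling a little less often than every four years.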

Increasing complexity of software

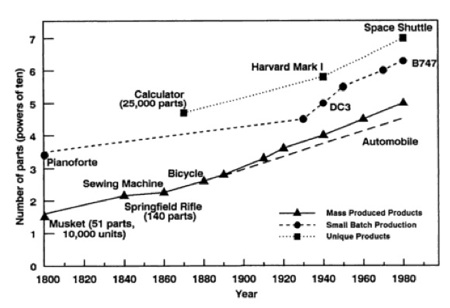

Throughout the technium, lineages of technology are restructured with additional layers of information to yield more complex artifacts. For the past two hundred years (at least) the number of parts in the most complex machines has been increasing. The diagram below is a logarithmic chart of the trends in complexity for mechanical apparatus. The first prototype turbojet had several hundred parts, while a modern turbojet, 30-50 times more powerful, has over 22,000 parts. The space shuttle has tens of millions of physical parts, yet it contains most of its complexity in its software, which is not included in this assessment.

Complexity of manufactured machines, 1800 – 1980

We can watch our culture complexify right before our eyes. As an almost trivial yet telling example, author Steven Johnson has noted how the plot lines of movies and TV have become more complex within his own lifetime. The number of characters involved in a story has doubled, the number of twists increased, the frequent insertion of complicated literary devices such as flashbacks has increased the levels of engagement. If a movie is a program (as in a computer program), then over time these narratives accumulate the equivalent of subroutines, parallel processing, and recursive loops, elevating the story into a more adaptive, living thing.

Our refrigerators, cars, even doors and windows are more complex than two decades ago. The strong trend for complexification in the technium provokes the question, how complex can it get? Where does the long arc of complexity take us?

Three scenarios of complexity for the next 1,000 years:

Scenario #1. As in nature, the bulk of technology remains simple, basic, and primeval because it works. And it works well as a foundation for the thin long tail of complex technology built upon it. If the technium is an ecosystem of technologies, then most of it will remain highly evolved as microscopic and plant equivalents: brick, wood, hammers, copper wires, electric motors and so on.

Scenario #2. Complexity, like all other factors in growing systems, plateaus out at some point, and some other quality we had not noticed earlier takes its place as the prime observable trend. In other words, complexity may simply be the rosy-colored lens we see the world through at this moment, the metaphor of the era, when in reality it is a reflection of us rather than evolution.

Scenario #2A. Complexity plateaus because we can’t handle it. While we could make technology run faster, smaller, denser, more complicated forever, we don’t want to beyond some point because it no longer matches our human scale. We could make living nano-scale keyboards, but they won’t fit our fingers.

Scenario #3. There is no limit to how complex things can get. Everything is complexifying over time, headed toward that omega point of ultimate complexity.

If I had to, I would bet, perhaps surprisingly, on scenario #1. The bulk of technology will remain simple or semi-simple, while a smaller portion will continue to complexify greatly. I expect our cities and homes a thousand years hence to be recognizable, rather than unrecognizable. As long as we inhabit bodies approximately our size (a few meters and 50 kilos) the bulk of the technology that will surround us need not be crazily more complex. And there is good reason to expect we’ll remain the same size, despite intense genetic engineering and downloading to robots. Our body size is weirdly almost exactly in the middle of the size of the universe. The smallest things we know about are approximately 30 orders of magnitude smaller than ourselves, and the largest structures in the universe are about 30 orders of magnitude bigger. We inhabit a middle scale that is sympathetic to sustainable flexibility in the universe’s current physics. Bigger bodies encourage rigidity, smaller ones encourage ephemeralization. As long as we own bodies – and what sane being does not want to be embodied? – the infrastructure technology we already have will continue (in general) to work. Roads of stone, buildings of modified plant material and earth, not that different from our cities and homes 2,000 years ago. Some visionaries might imagine complex living buildings in the future, for instance, but most average structures are unlikely to be more complex than the formerly living plants we already use. They don’t need to be. I think there is a “complex enough” restraint. Technologies need not complexify to be useful in the future. Danny Hillis, computer inventor, once confided to me that he believed that there’s a good chance that 1,000 years from now computers might still be running programming code from today, say a Unix kernel and TCP/IP. They almost certainly will be binary digital.
Like bacteria, or cockroaches, these simpler technologies remain simple, and remain viable, because they work. They don’t have to get more complex.

At the same time, there is no bound for the most complex things we will make. We’ll boggle ourselves with new complexity in many directions. This will complexify our lives further, but we’ll adapt to it. In fact, ongoing complexification – even in the thin bleeding edge – suggests a fourth scenario.

Scenario #4. Complexity gets more complex. We make or discover technological systems that require new, more complex definitions of complexity. Not merely, as I quoted Seth Lloyd above, because our definitions of complexity indicate ignorance, but because in fact we are finding/making things more complex and need new definitions. “Logical depth” won’t be enough for a definition as we keep making software more complex. As an example in the financial world, the invention of stock ownership added complexity to a marketplace. Then we invented making bets on those shares (stocks), and then we invented making bets on those bets (a derivative) and then bets on the bet on the bet (second-order derivative), each layer of relation between bits adding complexity, and requiring new ideas about complexity. We keep adding new levels and ways to complexify our economy, till the complexity exceeds our ability to measure it (or understand it). Over time we are increasing the complexity of complexity itself, by inventing/finding new ways for bits of information to relate to other bits. It is those intangible relations that form complexity. As far as we can see, there is no limit to the new ways bits can relate. Keep in mind the “spooky action at a distance” entanglement between quantum bits to understand how complicated complexity could get. In a thousand years, the concept of “complexity” will probably be as dead as the old notion of “metaphysics” because it will have turned out to be too blunt for the dozens if not hundreds of concepts it probably contains.

A movement towards complexity is what I call the “least objectionable theory” of the universe. Not everyone agrees with the trend, but not everyone agrees on anything at this scale. However, fewer people in both science and faith disagree about the large-scale movement toward greater complexity (whatever that is) over time. If complexity is defined as an irreversible escalation of increasing relations among the information flowing through structures, then complexity is rising.

The great “least objectionable” story so far: The arc of complexity begins in the ultimate simplicity of the big bang creation of something/nothing, and steadily sails through the universe, pushing most of it slowly towards complexity in the new levels of life, while at a few edges it accelerates through technology, making complexity itself ever more complex.